Chatbots and role models

Written By: Anna Liednikova

Our interactions are shaped by social context. In society we usually play some social role, and sometimes several at once. Since the context in which a particular chatbot is used generally stays the same, one might assume that the chatbot also needs only one role. But is that really so?

For a long time, this was indeed the case. Broadly speaking, chatbots fell into two main camps: goal-oriented (there is a task to solve: booking tickets or a hotel, making a diagnosis) and chit-chat (talking about everything and nothing, for as long as resources allow).

It was only in 2017 that Zhou Yu, Alan W Black and Alexander Rudnicky presented the first hybrid ("Learning Conversational Systems that Interleave Task and Non-Task Content"). Their research showed that a system alternating between social content (chit-chat) and task content (goal-oriented) is more successful at completing the task, and more engaging, than a purely task-oriented system (a "performer").

The main purpose of the chatbot they presented was to recommend movies, but to help it do so more effectively, several conversational strategies borrowed from the chit-chat role were added to its core functions (the goal-oriented role):

- Active listening strategies: engaging users by participating actively in the conversation, for example by asking follow-up questions about the current topic.

- Rationale strategies: using a knowledge base to link relevant facts to the current topic and bring them up.

- Personalized strategies: adapting to the user with information automatically extracted from earlier conversations with them. For example, offering to talk more about a topic the user has engaged with before.
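To make the interleaving idea more concrete, here is a minimal, hypothetical sketch in Python of a turn policy that alternates task turns with social turns and, on social turns, picks one of the three strategies above. It is not the authors' method: the fixed alternation rule, the priority order, `DialogueState`, `KNOWLEDGE_BASE` and the reply templates are all illustrative assumptions.

```python
from dataclasses import dataclass, field

@dataclass
class DialogueState:
    """Hypothetical per-conversation state (an assumption, not from the paper)."""
    turn: int = 0
    current_topic: str = ""
    past_topics: list = field(default_factory=list)  # topics from earlier conversations

# Hypothetical knowledge base mapping a topic to a fact the bot can offer.
KNOWLEDGE_BASE = {"sci-fi": "<a fact about sci-fi films from the knowledge base>"}

def choose_strategy(state: DialogueState) -> str:
    """Alternate task and social turns; on social turns, pick a strategy."""
    if state.turn % 2 == 0:
        return "task"  # goal-oriented role: move the recommendation forward
    if state.current_topic in state.past_topics:
        return "personalized"  # the user engaged with this topic before
    if state.current_topic in KNOWLEDGE_BASE:
        return "rationale"  # there is a fact to link to the topic
    return "active_listening"  # fall back to a follow-up question

def respond(state: DialogueState) -> str:
    """Produce a templated reply tagged with the chosen strategy."""
    strategy = choose_strategy(state)
    topic = state.current_topic
    templates = {
        "task": f"Would you like me to recommend a {topic} movie?",
        "personalized": f"You mentioned {topic} before. Shall we talk more about it?",
        "rationale": f"Speaking of {topic}: {KNOWLEDGE_BASE.get(topic, '')}",
        "active_listening": f"What do you enjoy most about {topic}?",
    }
    state.turn += 1
    return f"[{strategy}] {templates[strategy]}"

state = DialogueState(current_topic="sci-fi", past_topics=["comedy"])
for _ in range(3):
    print(respond(state))
```

A real system could learn when to switch between task and social content instead of following a fixed schedule; the alternating rule here only keeps the example short.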

Overall, though, performers and talkers progressed faster when developed separately than when developed together. Nevertheless, the trend toward combining different roles behind the interface of a single system persisted.

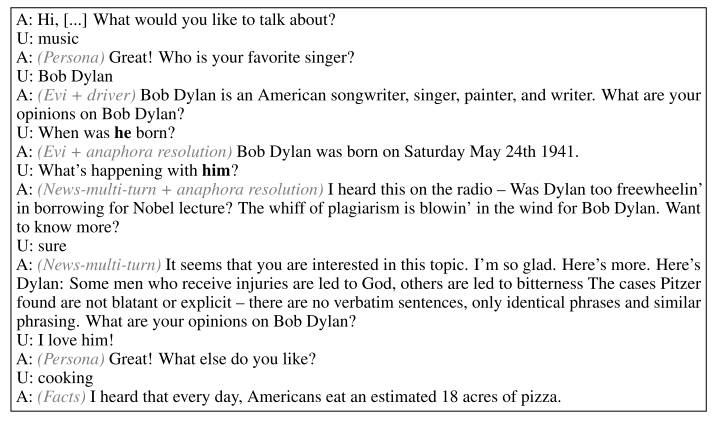

For example, at the end of the same year, the Heriot-Watt University team presented the social dialogue system [Alana](https://arxiv.org/abs/1712.07558) (a "chatterbox") as part of the Alexa Prize. This system combined several roles at once; they are highlighted in gray in the dialogue fragment shown in the image above.

In October 2020, the first [article](https://dl.acm.org/doi/10.1145/3383652.3423889) was published showing that mixing roles did not produce positive results. It turns out that public spaces and the workplace create a completely different context from being at home, talking one-on-one with a virtual assistant such as Alexa.

Facebook, meanwhile, released the chatbot Blender, which combines the following conversational skills:

- Engaging use of personality (PersonaChat)

- Engaging use of knowledge (Wizard of Wikipedia)

- Display of empathy (Empathetic Dialogues)

Hmm... wait, doesn't this remind you of the communication strategies of that movie-recommending chatbot? Yes, it is essentially the same idea, only the methods are now more effective. Where there used to be a limited set of templates, each strategy is now a separately developed chatbot that generates text after being trained on a huge amount of data.

As you can see, the idea of combining different roles and communication strategies is far from new, but it remains highly relevant in three respects: 1) developing each role separately, 2) determining the optimal set of roles for a given task, and 3) determining the best criterion for selecting which candidate response to show the user.
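Points 2 and 3 can be illustrated with a minimal, hypothetical sketch in Python: each role module proposes one candidate reply with a confidence score, and a selector picks the candidate with the highest score after weighting it by how appropriate that role is in the current context (home vs. work, loosely echoing the 2020 finding above). The role modules, their scores and the `ROLE_WEIGHTS` table are invented for illustration; this is neither Alana's nor Blender's actual ranking method, and in practice each module would be a trained model.

```python
from dataclasses import dataclass
from typing import Callable

@dataclass(frozen=True)
class Candidate:
    role: str     # which role module produced this reply
    text: str
    score: float  # the module's own confidence, assumed to be in [0, 1]

# Hypothetical role modules: keyword rules stand in for trained models.
def goal_oriented(utterance: str) -> Candidate:
    hit = "movie" in utterance.lower()
    return Candidate("goal", "How about a classic comedy tonight?", 0.9 if hit else 0.2)

def empath(utterance: str) -> Candidate:
    sad = any(w in utterance.lower() for w in ("sad", "tired", "bad day"))
    return Candidate("empath", "Sorry to hear that. Want to talk about it?", 0.8 if sad else 0.1)

def joker(utterance: str) -> Candidate:
    return Candidate("joker", "I'd tell you a UDP joke, but you might not get it.", 0.3)

# Hypothetical context-dependent weights: which roles fit where (point 2).
ROLE_WEIGHTS = {
    "home": {"goal": 1.0, "empath": 1.0, "joker": 1.0},
    "work": {"goal": 1.0, "empath": 0.5, "joker": 0.0},
}

def select(utterance: str, context: str,
           modules: list[Callable[[str], Candidate]]) -> Candidate:
    """Selection criterion (point 3): highest context-weighted score wins."""
    weights = ROLE_WEIGHTS[context]
    candidates = [module(utterance) for module in modules]
    return max(candidates, key=lambda c: c.score * weights.get(c.role, 0.0))

modules = [goal_oriented, empath, joker]
print(select("Hi there", "home", modules).text)
print(select("Hi there", "work", modules).text)
```

The same small talk gets a joke at home but a task-oriented reply at work, because the work context zeroes out the joker's weight. A weighted maximum is just one simple criterion; a learned ranker is another option.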

And you, dear friends: how many roles do you have in your arsenal, and how often do you use them? Do you ever feel it's time to level up your joker, your empath, or your arrogant side? How many roles do you allow yourself to mix at work? And had you ever thought about this before?


Last updated 2020-11-17 20:01:21 -0400